1. Install the Java Runtime Environment (JRE) or the JDK from www.java.com. The JDK is needed to compile the test program in step 7.

2. Set the PATH environment variable for the Java compiler and interpreter as follows:

PATH=.:$PATH:"/bin"

export PATH

3. Download jConnect (the Sybase JDBC driver) from http://www.sybase.com/products/middleware/jconnectforjdbc and untar/unzip the package.

4. Define two environment variables, CLASSPATH and JDBC_HOME.

i) Set JDBC_HOME as

[sybase@localhost jConnect-6_0]$ JDBC_HOME="/home/sybase/15.5/jConnect-6_0"

[sybase@localhost jConnect-6_0]$ export JDBC_HOME

[sybase@localhost jConnect-6_0]$ echo $JDBC_HOME

/home/sybase/15.5/jConnect-6_0

ii) Set CLASSPATH as

[sybase@localhost jConnect-6_0]$ CLASSPATH=".:$JDBC_HOME/classes/jconn3.jar:$JDBC_HOME/classes"

[sybase@localhost jConnect-6_0]$ export CLASSPATH

[sybase@localhost jConnect-6_0]$ echo $CLASSPATH

.:/home/sybase/15.5/jConnect-6_0/classes/jconn3.jar:/home/sybase/15.5/jConnect-6_0/classes

5. Install the jConnect stored procedures and tables:

For jConnect to function properly, you must install its stored procedures and tables on the Adaptive Server Enterprise or ASA.

Go to $JDBC_HOME/classes and run:

java IsqlApp -U sa -P -S jdbc:sybase:Tds:localhost.localdomain:5000 -I $JDBC_HOME/sp/sql_server15.0.sql -c go

6. Once the installation succeeds, test it.

To test, run the following sample from $JDBC_HOME:

java sample2.SybSample Version

If everything is fine, the jConnect version appears in the output window.

7. Testing Java connectivity with Sybase ASE

[sybase@localhost ~]$ cat JdbcTest.java

import java.sql.*;

public class JdbcTest
{
    public static void main( String args[] )
    {
        try
        {
            // Load the jConnect driver; jconn3.jar must be on the CLASSPATH.
            Class.forName( "com.sybase.jdbc3.jdbc.SybDriver" );

            // Connect as sa with an empty password, as in step 5.
            Connection conn = DriverManager.getConnection( "jdbc:sybase:Tds:localhost.localdomain:5000", "sa", "" );
            Statement stmt = conn.createStatement();
            ResultSet rs = stmt.executeQuery( "SELECT * FROM master..sysdatabases" );

            // Print the first column (the database name) of every row.
            while( rs.next() )
                System.out.println( rs.getString(1) );

            rs.close();
            stmt.close();
            conn.close();
        }
        catch( Exception e )
        {
            System.out.println( e );
        }
    }
}

i) Compile the code

[sybase@localhost ~]$ javac JdbcTest.java

ii) Run the code

[sybase@localhost ~]$ java JdbcTest

master

model

tempdb

sybsystemdb

sybsystemprocs

pubs2

sybsecurity

Sybase Blog - Anything About Sybase ASE, REP, IQ.

Thursday, March 24, 2011

Saturday, March 19, 2011

Happy Holi to All of you!

Happy Holi to All of you!

Friday, March 11, 2011

ASE Archive Databases for Compressed Backup.

Observation:

1. An archive database works with a compressed backup only if the dump was taken with the option: with compression=""

2. If the backup was taken with the with compression="" option, there is no need to specify the compression level during load database.

3. We can run dbcc on recent dump files of the databases without hampering the performance of the production database.

4. We can also do object-level recovery using the archive database.

5. To map the dump stripes, ASE creates the sysaltusages table in the scratch database.

Testing:

1> dump database test_db to "/data/sybase/testing_host/dump/test_db.dmp1"

2> stripe on "/data/sybase/testing_host/dump/test_db.dmp2" with compression="4"

3> go

Backup Server session id is: 131. Use this value when executing the 'sp_volchanged' system stored procedure after fulfilling any

volume change request from the Backup Server.

Backup Server: 4.41.1.1: Creating new disk file /data/sybase/testing_host/dump/test_db.dmp1.

Backup Server: 4.41.1.1: Creating new disk file /data/sybase/testing_host/dump/test_db.dmp2.

Backup Server: 6.28.1.1: Dumpfile name 'sr_load1106806A97' section number 1 mounted on disk file

'/data/sybase/testing_host/dump/test_db.dmp2'

Backup Server: 6.28.1.1: Dumpfile name 'sr_load1106806A97' section number 1 mounted on disk file

'/data/sybase/testing_host/dump/test_db.dmp1'

Backup Server: 4.188.1.1: Database test_db: 11960 kilobytes (4%) DUMPED.

Backup Server: 4.188.1.1: Database test_db: 29094 kilobytes (6%) DUMPED.

Backup Server: 4.188.1.1: Database test_db: 315648 kilobytes (100%) DUMPED.

Backup Server: 4.188.1.1: Database test_db: 323870 kilobytes (100%) DUMPED.

Backup Server: 3.43.1.1: Dump phase number 1 completed.

Backup Server: 3.43.1.1: Dump phase number 2 completed.

Backup Server: 3.43.1.1: Dump phase number 3 completed.

Backup Server: 4.188.1.1: Database test_db: 323884 kilobytes (100%) DUMPED.

Backup Server: 3.42.1.1: DUMP is complete (database test_db).

1> !!ls -ltr /data/sybase/testing_host/dump/test_db.dmp*

[sh:ls -ltr /data/sybase/testing_host/dump/test_db.dmp*]

-rw-r----- 1 sybase dba 40783872 Mar 9 07:35 /data/sybase/testing_host/dump/test_db.dmp1

-rw-r----- 1 sybase dba 41261056 Mar 9 07:35 /data/sybase/testing_host/dump/test_db.dmp2
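As a rough check on what compression level 4 bought in this test, the two stripe sizes from ls can be compared with the last "kilobytes DUMPED" figure. This is only a sketch: it assumes that figure counts uncompressed data, and the class and method names are made up for illustration.

```java
final class DumpCompressionRatio {
    // Compressed size as a fraction of the uncompressed size.
    static double ratio(long compressedBytes, long uncompressedBytes) {
        return (double) compressedBytes / uncompressedBytes;
    }

    public static void main(String[] args) {
        long stripe1 = 40_783_872L;  // test_db.dmp1 size in bytes, from ls
        long stripe2 = 41_261_056L;  // test_db.dmp2 size in bytes, from ls
        long dumpedKb = 323_884L;    // last "kilobytes DUMPED" line (assumed uncompressed)
        double r = ratio(stripe1 + stripe2, dumpedKb * 1024L);
        System.out.printf("dump files are %.1f%% of the dumped data%n", 100 * r);
    }
}
```

Under that assumption the two stripes together come to roughly a quarter of the dumped data.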

1> create database scratch on data16='100M' log on log01='100M'

2> go

CREATE DATABASE: allocating 51200 logical pages (100.0 megabytes) on disk 'data16'.

CREATE DATABASE: allocating 51200 logical pages (100.0 megabytes) on disk 'log01

Database 'scratch' is now online.

1> sp_dboption 'scratch',"scratch database", "true"

2> go

Database option 'scratch database' turned ON for database 'scratch'.

Running CHECKPOINT on database 'scratch' for option 'scratch database' to take e

(return status = 0)

1> use scratch

2> go

1> checkpoint

2> go

1> use master

2> go

1> sp_configure 'compression memory size'

2> go

Parameter Name           Default  Memory Used  Config Value  Run Value  Unit              Type
-----------------------  -------  -----------  ------------  ---------  ----------------  -------
compression memory size  0        152          0             0          memory pages(2k)  dynamic

(1 row affected)

(return status = 0)

1> sp_configure 'compression memory size',64

2> go

Parameter Name           Default  Memory Used  Config Value  Run Value  Unit              Type
-----------------------  -------  -----------  ------------  ---------  ----------------  -------
compression memory size  0        280          64            64         memory pages(2k)  dynamic

(1 row affected)

1> create archive database archivedb

2> on data15='50M'

3> with scratch_database = scratch

4> go

CREATE DATABASE: allocating 25600 logical pages (50.0 megabytes) on disk 'data15'.

1> load database archivedb from "/data/sybase/testing_host/dump/test_db.dmp1"

2> stripe on "/data/sybase/testing_host/dump/test_db.dmp2"

3> go

Started estimating recovery log boundaries for database 'archivedb'.

Database 'archivedb', checkpoint=(303154, 21), first=(303154, 21), last=(303155, 6).

Completed estimating recovery log boundaries for database 'archivedb'.

Started ANALYSIS pass for database 'archivedb'.

Completed ANALYSIS pass for database 'archivedb'.

Started REDO pass for database 'archivedb'. The total number of log records to process is 11.

Redo pass of recovery has processed 2 committed and 0 aborted transactions.

Completed REDO pass for database 'archivedb'.

Use the ONLINE DATABASE command to bring this database online; ASE will not bring it online automatically.

1> online database archivedb

2> go

Started estimating recovery log boundaries for database 'archivedb'.

Database 'archivedb', checkpoint=(303154, 21), first=(303154, 21), last=(303155, 6).

Completed estimating recovery log boundaries for database 'archivedb'.

Started ANALYSIS pass for database 'archivedb'.

Completed ANALYSIS pass for database 'archivedb'.

Recovery of database 'archivedb' will undo incomplete nested top actions.

Database 'archivedb' is now online.

1> use archivedb

2> go

1> set rowcount 5

2> go

1> select name from sysobjects where type='U'

2> go

name

---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

testing_FPOSDailyFunding

testing_IDeltaContracts

testing_RMSRates

testing_ESSPLFXRpt

testing_ESSAlgo

(5 rows affected)

1> set rowcount 0

2> go

1> select * from sysaltusages where 1=2

2> go

dbid   location    lstart      size        vstart      vdevno      segmap
------ ----------- ----------- ----------- ----------- ----------- -----------

(0 rows affected)

1> set rowcount 5

2> go

1> select * from sysaltusages

2> go

dbid   location    lstart      size        vstart      vdevno      segmap
------ ----------- ----------- ----------- ----------- ----------- -----------
     8           4           0      256000           1          24           3
     8           4      256000       51200           1          24           4
     8           4      307200      256000           1          24           3
     8           5           0          32          42          26           3
     8           5          32          32          51          26           3

(5 rows affected)

ASE Interview Questions!

What is an identity column?

What are the advantages and disadvantages of identity columns?

From a performance point of view, which is better: if exists or if not exists?

How can we avoid fragmentation in a table?

There is an update statement on one APL table and one DOL table. Which one would be faster? Consider two cases: the where clause uses the clustered index column, and the where clause uses no index.

Why is reorg rebuild on a DOL table faster than a clustered index rebuild on an APL table?

Why is creating a clustered index with sorted_data on an APL table faster than reorg rebuild on a DOL table?

What is the Sybase recommendation for tempdb size? Suppose the server holds databases of 300 GB and 150 GB; what tempdb size would Sybase recommend?

What is the difference between dsync and direct io?

Suppose we are not concerned about recovery of the database; which would be better for performance, dsync (on/off) or direct io, and why?

What is asynchronous prefetch? How does it improve performance?

We have a server with a 4K page size; what pool sizes are possible in the server?

Sybase recommends a 4K pool for log usage on a 2K page size server; what is the pool recommendation for a 4K page size server?

How can we reduce spinlock contention without partitioning the data cache?

Can we have spinlock contention with a single engine?

In a sysmon report, which five sections would you look at for performance?

What is the metadata cache?

What is an archive database?

How can we enable an archive database for compressed backups?

How is object-level recovery possible in ASE?

How can we find the culprit spid that has filled up the tempdb database?

How can we find the culprit spid that has heavily used the log segment of tempdb?

What is partitioning? How does partitioning help increase performance?

Suppose a table is partitioned on a column; how will the dataserver handle an insert into the table?

Apart from query plans, what else resides in the procedure cache?

What is the new configuration parameter "optimization goal"? What values can we give it?

A user is experiencing very slow performance; what could be the reasons for the slowness?

What is engine affinity, and how can we set it?

If there are 3 CPUs in the box, how many engines can we configure?

Suppose the dataserver is running very slowly and sp_monitor shows 100% CPU usage; what could be the issue? Where would you look?

What are error classes in Replication Server?

What is the difference between warm standby and table-level replication?

Can you name five cases in which a thread goes down in replication?

What are triggers? What types of triggers are there, and how many triggers can we configure on a table?

What are the different locking schemes in ASE, and what are latches?

How can we dump a replication queue?

Friday, March 4, 2011

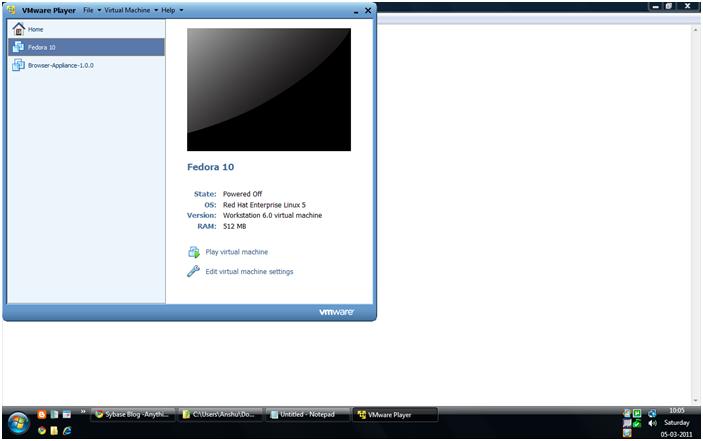

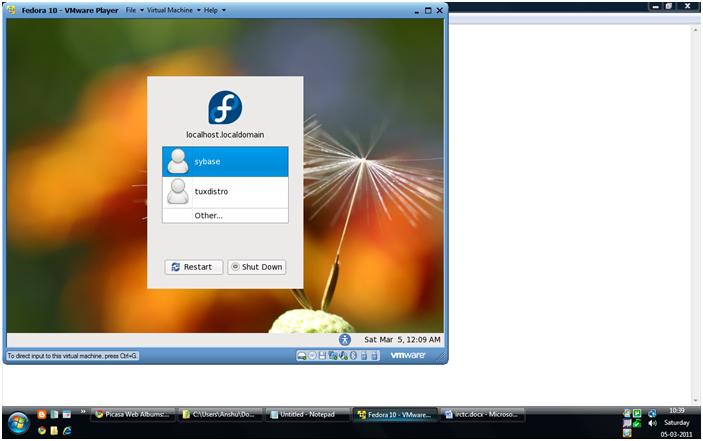

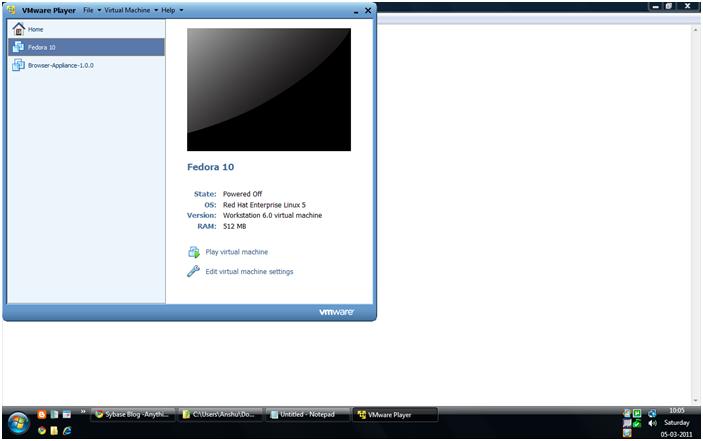

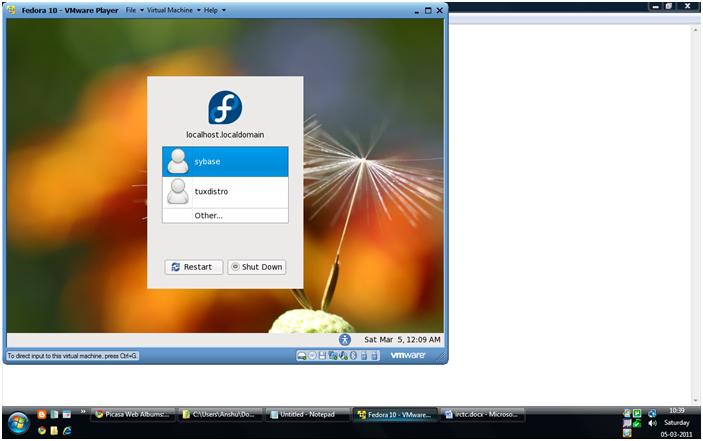

ASE Installation on Linux: Using a Fedora VMware Machine on Windows

1. Download the free VMware Player from the VMware website: www.vmware.com/download/player/

2. Install the VMware Player you downloaded above.

3. Download the virtual appliance for the Fedora 10 OS: http://www.vmware.com/appliances/directory/59370

4. Unzip the virtual appliance.

5. After unzipping, open the Fedora.10.vmx file. It will start your guest OS.

For Sybase Installation:

6. Create the sybase user and add it to the sybase group.

7. Installing ASE on Linux requires 64 MB of shared memory, so edit /etc/sysctl.conf and set kernel.shmmax to more than 64 MB (the value is in bytes; 64 MB is 67108864).

8. Run sysctl -p /etc/sysctl.conf to apply the kernel-level setting.

9. Now log in with the sybase account, download or copy the ASE software from the Sybase website, and start the installation as below.

10. Please see the slide show below for the rest of the installation:

[slideshow]

Thursday, February 24, 2011

Load database: A Recurring Issue!

Hi Guys,

As we already know, a database cannot be loaded while any user is using it.

In our environment, the load script kills these spids just before the actual database load.

Sometimes it works and sometimes it fails: client IDs log in to the server so frequently that by the time the load statement actually runs, the database is in use again.

This is a very frequent issue, and I have seen it in many environments where client IDs log in very rapidly.

As a workaround we lock the IDs manually and unlock them once the load has started (we cannot wait until the load finishes to unlock them). This requires manual intervention.

I would like to raise a question: why can't Sybase provide a load database command with a kill option? Any workaround like the ones above cannot be 100% effective, because killing the spids (by whatever means) and loading the database are two different tasks. Between the two there is always some gap, maybe only milliseconds, and that gap is enough for a login to get in.

One more option could be:

We put the database into an offline state (or something similar, so that no one can use it) before the load, and then run load database.

Kindly let me know your thoughts, and tell me if I am missing anything above.

I have posted the same question in various forums as well and will update you if I get any response.

Till then..

Bye

Monday, February 21, 2011

Sybase Interview Ques Updated!!

Guys,

I have updated the Sybase ASE/Rep interview questions page with questions I came across recently.

Please have a look @ http://sybaseblog.com/interviewquestions/

Happy Learning !!

sybanva

Sunday, February 6, 2011

Defaults, Rules and User-Defined Datatypes

Question

=======

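Consider a table creation script

create table table1(id int not null,
                    cost smallmoney default $10
)

Will it allow a null value in the column "cost"?

Answer

======

No. The default only supplies a value when an insert gives none; the column itself is still not null unless it is declared null (assuming the "allow nulls by default" database option is off).

Source (Unleashed)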

Question

=======

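How do you find all tables and columns that are bound to a rule/default?

Answer

======

select "table" = o.name, "column" = c.name, "user" = user_name(o.uid), "rule" = object_name(c.domain)
from sysobjects o, syscolumns c
where o.id = c.id
and object_name(c.domain) = 'rule/default name'

(syscolumns keeps the rule ID in domain and the default ID in cdefault, so for a default compare object_name(c.cdefault) instead.)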

Sequence of events when using datatypes

=================================

sp_addtype ssn_type, 'char(9)', 'not null'
go
create rule ssn_rule as
@ssn between '001111' and '1111111'
or @ssn = 'N/A'
go
create default ssn_default as
'N/A'
go
sp_bindrule ssn_rule, ssn_type
go
sp_bindefault ssn_default, ssn_type
go
create table ssn_table
(ssn ssn_type, name varchar(30))
go

==================

A rule or default bound explicitly to a column overrides a rule or default bound to a datatype. A subsequent bind to the datatype replaces the bind to the column as long as the column and datatype had the same rule or default prior to the modification.

Renaming Objects

(Source - Unleashed)

Question

=======

If the name of a table is changed, will stored procedures and views that use it still work, or will they become invalid?

Answer

======

Even though the name of the table has changed, objects such as views and procedures that refer to that table by name are not affected by the change. That is because SQL-based objects like views and procedures are stored both as text in the syscomments table and as a pre-parsed query tree that identifies related objects by ID instead of by name. When a table name changes, a dependent view still works because the object ID of the table (stored in sysobjects) does not change.

Tuesday, February 1, 2011

Performance Tuning – Scenario Based

Suppose the tables in our query are joined in the following order:

#tmp > Table1 > Table2

but the query plan uses the join order below:

Table1 > Table2 > #tmp

This causes a full index scan on both Table1 and Table2 (which is what most people would expect) before the temp table is used to filter the results. We can still get a performance improvement here.

Solution --> Use "set forceplan on" to force the join order written in the query. Forcing the order means Table1 and Table2 are accessed by table scan, but we can still gain performance: with #tmp filtering the rows first, a table scan over a small number of records can be faster than an index scan over a large number of records on the big tables Table1 and Table2.

Friday, January 28, 2011

Joins Algorithms

Source : sybase.com and www

Time complexity of a nested loop join is O(N * M), where N and M are the row counts of the two inputs.

Time complexity of a hash join is O(N + M), where N is the size of the hashed table and M is the size of the lookup table. Hashing and hash lookups have constant complexity.

Time complexity of a merge join is O(N*Log(N) + M*Log(M)): it is the sum of the times to sort both tables plus the time to scan them.

Hash Join Algo

====================

The hash join algorithm builds an in-memory hash table of the smaller of its two inputs, and then reads the larger input and probes the in-memory hash table to find matches, which are written to a work table. If the smaller input does not fit into memory, the hash join operator partitions both inputs into smaller work tables. These smaller work tables are processed recursively until the smaller input fits into memory.

The hash join algorithm has the best performance if the smaller input fits into memory, regardless of the size of the larger input. In general, the optimizer will choose hash join if one of the inputs is expected to be substantially smaller than the other.
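The build and probe phases described above can be sketched in Java as follows. This is only an illustration, assuming the smaller input fits in memory (so the recursive partitioning step is left out). The class name, method name and the {key, value} row layout are made up for the example and say nothing about how the server implements it internally.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class HashJoinSketch {
    // Joins {key, value} rows on row[0]; returns {key, smallValue, largeValue}.
    static List<String[]> hashJoin(List<String[]> small, List<String[]> large) {
        // Build phase: hash the smaller input on its join key.
        Map<String, List<String[]>> table = new HashMap<>();
        for (String[] row : small) {
            table.computeIfAbsent(row[0], k -> new ArrayList<>()).add(row);
        }
        // Probe phase: one hash lookup per row of the larger input.
        List<String[]> out = new ArrayList<>();
        for (String[] probe : large) {
            for (String[] build : table.getOrDefault(probe[0], List.of())) {
                out.add(new String[] {probe[0], build[1], probe[1]});
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<String[]> small = List.of(new String[] {"1", "a"}, new String[] {"2", "b"});
        List<String[]> large = List.of(
                new String[] {"2", "x"}, new String[] {"3", "y"}, new String[] {"2", "z"});
        for (String[] row : hashJoin(small, large)) {
            System.out.println(String.join(",", row));
        }
    }
}
```

Each input is read once, which is where the O(N + M) figure comes from.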

Merge Join Algo

==================

The merge join algorithm reads two inputs which are both ordered by the join attributes. For each row of the left input, the algorithm reads all of the matching rows of the right input by accessing the rows in sorted order.

If the inputs are not already ordered by the join attributes (perhaps because of an earlier merge join or because an index was used to satisfy a search condition), then the optimizer adds a sort to produce the correct row order. This sort adds cost to the merge join.

One advantage of a merge join compared to a hash join is that the cost of sorting can be amortized over several joins, provided that the merge joins are over the same attributes. The optimizer will choose merge join over a hash join if the sizes of the inputs are likely to be similar, or if it can amortize the cost of the sort over several operations.
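As a rough illustration of the merge step, the sketch below joins two lists that are already sorted on the join key. The {key, value} row layout and the class name are assumptions made for the example; the sort that the optimizer may have to add is not shown.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Minimal merge join sketch. Both inputs must be sorted ascending by row[0].
final class MergeJoinSketch {

    // Returns rows of the form {key, valueFromLeft, valueFromRight}.
    static List<int[]> join(List<int[]> left, List<int[]> right) {
        List<int[]> result = new ArrayList<>();
        int i = 0;
        int j = 0;
        while (i < left.size() && j < right.size()) {
            int leftKey = left.get(i)[0];
            int rightKey = right.get(j)[0];
            if (leftKey < rightKey) {
                i++; // left row has no partner yet, advance left
            } else if (leftKey > rightKey) {
                j++; // right row has no partner, advance right
            } else {
                // Find the end of the run of equal keys on the right side.
                int runEnd = j;
                while (runEnd < right.size() && right.get(runEnd)[0] == leftKey) {
                    runEnd++;
                }
                // Pair every left row carrying this key with the whole right run.
                while (i < left.size() && left.get(i)[0] == leftKey) {
                    for (int k = j; k < runEnd; k++) {
                        result.add(new int[] {leftKey, left.get(i)[1], right.get(k)[1]});
                    }
                    i++;
                }
                j = runEnd;
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<int[]> left = Arrays.asList(
                new int[] {1, 10}, new int[] {2, 20}, new int[] {2, 21}, new int[] {4, 40});
        List<int[]> right = Arrays.asList(
                new int[] {2, 200}, new int[] {2, 201}, new int[] {3, 300}, new int[] {4, 400});
        for (int[] row : join(left, right)) {
            System.out.println(Arrays.toString(row));
        }
    }
}
```

The output is itself ordered by the join key, so a following merge join on the same attributes would not need another sort.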

Nested Loops Join Algo

==================

The nested loops join computes the join of its left and right sides by completely reading the right hand side for each row of the left hand side. (The syntactic order of tables in the query does not matter, because the optimizer chooses the appropriate join order for each block in the request.)

The optimizer may choose nested loops join if the join condition does not contain an equality condition, or if it is optimizing for first-row time.

Since a nested loops join reads the right hand side many times, it is very sensitive to the cost of the right hand side. If the right hand side is an index scan or a small table, then the right hand side can likely be computed using cached pages from previous iterations. On the other hand, if the right hand side is a sequential table scan or an index scan that matches many rows, then the right hand side needs to be read from disk many times. Typically, a nested loops join is less efficient than other join methods. However, nested loops join can provide the first matching row quickly compared to join methods that must compute their entire result before returning.

Nested loops join is the only join algorithm that can provide sensitive semantics for queries containing joins. This means that sensitive cursors on joins can only be executed with a nested loops join.
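A nested loops join is the simplest of the three to sketch. The example below is an illustration under assumed names (rows are int arrays, the join condition is passed in as a predicate); it uses a non-equality condition, the case where a hash or merge join cannot help.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.BiPredicate;

// Minimal nested loops join sketch. Rows are int arrays; row[0] is the join column.
final class NestedLoopJoinSketch {

    // Returns rows of the form {leftKey, rightKey} for every pair that satisfies the condition.
    static List<int[]> join(List<int[]> left, List<int[]> right,
                            BiPredicate<int[], int[]> condition) {
        List<int[]> result = new ArrayList<>();
        for (int[] l : left) {
            // The right side is read completely for every row of the left side.
            for (int[] r : right) {
                if (condition.test(l, r)) {
                    result.add(new int[] {l[0], r[0]});
                }
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<int[]> left = Arrays.asList(new int[] {1}, new int[] {5});
        List<int[]> right = Arrays.asList(new int[] {2}, new int[] {4}, new int[] {6});
        // Non-equality join condition: left key < right key.
        for (int[] row : join(left, right, (l, r) -> l[0] < r[0])) {
            System.out.println(Arrays.toString(row));
        }
    }
}
```

The first matching pair is available as soon as the inner loop finds it, without any build or sort step up front, which matches the first-row behaviour described above.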

Tuesday, January 25, 2011

Data Explosion : R V Ready?

We have social networking profiles on Orkut, Facebook, LinkedIn and many other online applications.

Have you realized how many profiles have been created in the last 5 years? The number is huge.

Have you realized how much data is stored behind these profiles?

In India, our generation was the first to start using these profiles. Think of the number of users two generations from now, and the amount of data that will come with them.

When we make a call, go shopping, do our banking, or visit a social networking site and create a new profile, we are creating data.

Can you guess how many people make calls or create new profiles in a single minute?

Every minute, we add more data.

Don't you think we will cross into terabyte and petabyte databases very soon?

We certainly will. Are our existing technologies capable enough for that? I can't say yes.

Second, it will be very tough to decide which data is obsolete so that we can purge our databases. Isn't it?

We have started creating data very fast. We will certainly need to enhance our technologies; maybe we need to think beyond our existing DB technologies.

What are your thoughts?

Thursday, January 13, 2011

Performance Tuning - Scenario Based

Scenario 1

------------

An application issues multiple statements, and each statement calls a specific stored procedure. Because the application is multithreaded, it calls the stored procedure many times concurrently. It has been noticed that for some calls, with certain sets of parameters, the procedure takes longer. What could be the issue?

Solution 1

-----------

First, we can't put the blame squarely on the stored procedure for the slower performance. We have a few options here:

1. Reduce the number of concurrent calls to the stored procedure.

2. Check whether the procedure calls any child procedure with an input parameter. Suppose the child procedure looks up a location that the parent procedure passes as an input parameter. If this parameter is absent, the child procedure might process all available locations, which could be a huge number, and consume a lot of time. So before calling the child procedure, apply a proper check on the parameter; this reduces the time taken by the remaining calls.
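On the application side, both options can be sketched roughly as follows. Everything here is hypothetical: the class name, the limit, and the Runnable that stands in for the real stored procedure call are assumptions for illustration, and the same parameter check could equally live inside the parent procedure itself.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

// Sketch only: caps concurrent procedure calls and guards the filter parameter.
final class ThrottledCallSketch {
    private final Semaphore permits;
    private final AtomicInteger active = new AtomicInteger();
    private final AtomicInteger peak = new AtomicInteger();

    ThrottledCallSketch(int maxConcurrent) {
        this.permits = new Semaphore(maxConcurrent);
    }

    // Option 1: a caller blocks here until fewer than maxConcurrent calls are running.
    // The Runnable is a stand-in for the actual stored procedure call.
    void call(Runnable procedureCall) {
        permits.acquireUninterruptibly();
        try {
            int now = active.incrementAndGet();
            peak.accumulateAndGet(now, Math::max); // remember the highest concurrency seen
            procedureCall.run();
        } finally {
            active.decrementAndGet();
            permits.release();
        }
    }

    int peakConcurrency() {
        return peak.get();
    }

    // Option 2: only call the child procedure when the filter parameter is usable,
    // so it never falls back to processing every location.
    static boolean hasUsableFilter(String location) {
        return location != null && !location.trim().isEmpty();
    }

    public static void main(String[] args) {
        ThrottledCallSketch throttle = new ThrottledCallSketch(3);
        String location = "Pune"; // hypothetical parameter value
        if (hasUsableFilter(location)) {
            throttle.call(() -> System.out.println("calling procedure for " + location));
        }
        System.out.println("peak concurrency: " + throttle.peakConcurrency());
    }
}
```

In a multithreaded application, all worker threads would share one ThrottledCallSketch instance so that the permit count applies across the whole process.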

Wednesday, January 12, 2011

Sparc T4 will have better single-thread performance: important for large databases and ERP applications

Source :: http://www.pcworld.idg.com.au/article/370535/oracle_halve_core_count_next_sparc_processor/

The Sparc T4 will have better single-thread performance instead, which is important for large databases and ERP applications.

Chips with high core counts tend to be better at some workloads than others. They are good at jobs that can be broken into many smaller parts, such as processing high volumes of Web requests and online transactions, but fare worse at big databases and ERP applications, where single-thread performance is important.

Sunday, January 2, 2011

2010 in review - By Wordpress.

By Wordpress

===============

The stats helper monkeys at WordPress.com mulled over how this blog did in 2010, and here's a high level summary of its overall blog health:

The Blog-Health-o-Meter™ reads This blog is doing awesome!.

A helper monkey made this abstract painting, inspired by your stats.

A Boeing 747-400 passenger jet can hold 416 passengers. This blog was viewed about 7,400 times in 2010. That's about 18 full 747s.

In 2010, there were 25 new posts, growing the total archive of this blog to 32 posts. There were 2 pictures uploaded, taking up a total of 7kb.

The busiest day of the year was April 7th with 72 views. The most popular post that day was ASE/REP/IQ Interview Ques.

The top referring sites in 2010 were sybaseteam.com, google.co.in, en.wordpress.com, facebook.com, and google.com.

Some visitors came searching, mostly for sybase iq interview questions, sybase blog, sybase ase interview questions, sybase, and sybase replication server interview questions.

These are the posts and pages that got the most views in 2010.

ASE/REP/IQ Interview Ques February 2010

2 comments

Cleaning Shared Memory Segment and semaphore, for the ASE startup.. January 2010

2 comments

ASE15 New Features - I: DBA Perspective December 2009

directio,dsync & sync , async IO April 2010

2 comments

Dataserver Health Check Script March 2010

Saturday, January 1, 2011
